How scientific writing lost its soul

Whenever I read papers written many decades ago, I'm immediately struck by how different they feel.

Take this introduction from a 1928 paper by Albert Einstein that I was reading yesterday:

"Riemannian Geometry has led to a physical description of the gravitational field in the theory of general relativity, but it did not provide concepts that can be attributed to the electromagnetic field. Therefore, theoreticians aim to find natural generalizations or extensions of Riemannian geometry that are richer in concepts, hoping to arrive at a logical construction that unifies all physical field concepts under one single leading point. Such endeavors brought me to a theory which should be communicated even without attempting any physical interpretation, because it can claim a certain interest just because of the naturality of the concepts introduced therein."

He began a follow-up paper, New Possibility for a Unified Field Theory of Gravitation and Electricity, published in the same 1928 Session Reports of the Prussian Academy of Sciences, as follows:

"Some days ago I explained in a short note in these reports, how by using a n-bein field a geometric theory can be constructed that is based on the notion of a Riemann-metric and distant parallelism. I left open the question if this theory could serve for describing physical phenomena. In the meantime I discovered that this theory - at least in first approximation – yields the field equations of gravitation and electromagnetism in a very simple and natural manner. Thus it seems possible that this theory will substitute the theory of general relativity in its original form."

Yes, Einstein was a masterful thinker and writer.

But pull up virtually any paper written a hundred or so years ago and you'll be surprised by just how readable it is.

The tone is unpretentious, it's clear why the work was done, and even as a physicist who doesn't specialize in the paper's topic, you can follow along.

Many papers from that era are interesting because they tackle deep, fundamental problems. And most of them describe a single idea.

The general frame for writing papers back then clearly was: This is the problem I'm wrestling with, here are some thoughts and my attempt to solve it.

Many papers back then also simply said: Here's something interesting I noticed.

No need to make it seem like something bigger by wrapping it in a bunch of fluff.

Letters published back then in Nature or Physical Review Letters, for example, were like that.

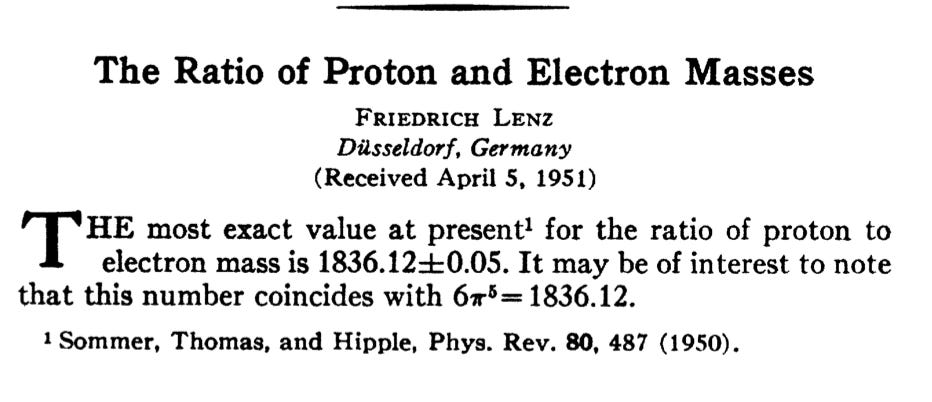

An extreme example of this type of paper is The Ratio of Proton and Electron Masses by Friedrich Lenz, published in 1951 in Physical Review.

It's just two sentences long:

That's the whole thing.

When reading old papers, you can feel that people were simply grappling to make sense of nature, and often just having fun.

Papers had rough edges, included personal opinions, and often described unfinished work.

It didn’t matter much who submitted the paper as long as the ideas seemed reasonable and were interesting.

Note, for example, how the screenshot of the paper above doesn’t mention any academic affiliation.

For most of scientific publishing’s history, “there was more journal space than there were articles to print”.

Publishing something was a way to invite feedback and get new input from fellow researchers.

Private communication between researchers was common too, of course. But publishing a paper in a journal added an element of serendipity. Someone you didn’t know might send you that one missing puzzle piece.

If you look through the archives of Einstein’s writings, you can see that he regularly wrote letters to the authors of papers, sharing thoughts, giving feedback, and pointing out errors in a friendly way.

As Étienne Fortier-Dubois put it, journals like Nature were more similar to Twitter than to modern research journals.

“You could write to Nature, be published within a week, and read the replies to your letter within two weeks.”

Journals like Nature were read by people who wanted to keep up with progress on important scientific questions.

Papers were meant to be read and people were actually reading them.

This is how things worked back then.

Crazy, I know.

Pull up any recently published paper, and the contrast couldn’t be starker.

Modern papers read like they were written by a political committee.

The tone is cold, impersonal, overly careful, and professional.

There are no rough edges or opinions. All the work is finished and polished.

Even with a PhD in the field, it’s usually impossible to follow along, and the explanation of why the work was done is shallow at best.

The work is usually highly incremental, so you would need to read dozens of earlier papers before you could even start to grasp why the paper in front of you is important.

I very much doubt anyone is sitting down on Sunday mornings with a cup of coffee to read the latest issue of Nature.

Instead of a single atomic idea, most papers contain a multitude of claims.

For example, Dorothy Bishop, professor of Developmental Neuropsychology at Oxford University, writes on her blog:

“I recently read a paper that reported, all within the space of a single Results section about 2000 words long, (a) a genetic association analysis; (b) replications of the association analysis on five independent samples; (c) a study of methylation patterns; (d) a gene expression study in mice; and (e) a gene expression study in human brains.”

Nature reported in 2023 that the median time between submission and acceptance is now 268 days. By the time a paper is published, the researchers have typically long moved on and are thinking about completely different topics.

When someone reaches out nowadays to the author of a paper, it’s typically to tell them they forgot to cite one of their papers.

What Happened?

Let’s work backwards step by step.

This is our starting point:

The tone in modern papers is impersonal and dry. The writing lacks style.

Only finished, polished work is published. No work-in-progress or opinions are included.

The work is usually incremental instead of fundamental.

It’s impossible to casually read modern papers, even as an expert. One reason, in addition to the dense, jargon-laden writing style, is the inflation of the number of claims per paper.

We know that this isn’t simply the unchangeable nature of the genre of scientific publishing. Things used to be different a hundred years ago.

So what explains these changes?

One data point is the explosion in the number of authors per paper.

A hundred years ago, single-author papers were the norm. Nowadays, they’ve become virtually extinct.

It’s not surprising that the tone in scientific papers became less personal as a result.

A second development is the introduction of peer review.

This happened much more recently than most people assume.

Nature introduced peer review only in 1973. The prestigious medical journal The Lancet started reviewing papers in 1976.

Only one of the 300 or so papers Albert Einstein published was peer-reviewed.

Contrary to what most people outside academia assume, peer review does not mean serious fact-checking. Reviewers do not replicate experiments or check calculations step by step.

Peer review’s primary function is to check a draft’s “suitability for publication”. What that means is largely up to the referee to decide.

There is little direct evidence that peer review improves the quality of published papers.

But what peer review does do is ensure that everyone’s main focus becomes making their draft “peer-review-proof”.

Peer reviewers are typically not paid. Often you’re dealing with an already overworked and underpaid postdoc.

So they’ll happily jump at the first reason they can find to quickly reject a given draft.

Bulletproofing your draft requires carefully removing anything that can be criticized.

No opinions, no works in progress allowed. (The referee will tell you to come back when the work is finished.)

You’re definitely not allowed to express any kind of confusion. (The referee will tell you to come back once you understand everything properly.)

No fun, no humor, no analogies allowed. This is serious business now. (Yes, papers do get rejected for being “too fun”.)

A smart strategy is also to cram as many claims as you can into your paper. This makes it much harder for the referee to reject the draft as “not relevant” or “not important enough”, since they have to write something about each claim.

The third development that went hand-in-hand with the other two is that researchers started caring about citation counts.

The Science Citation Index (published as a book) was launched by information scientist Eugene Garfield in 1964.

This was a huge turning point since it allowed researchers to see how often scientific papers were being cited, and by whom.

Previously, hardly anyone thought about citations as a thing in their own right, since there was no way to see them beyond the reference list of each individual paper.

But once the cat was out of the bag, there was no going back.

Citations became an objective.

Garfield also invented the Journal Impact Factor to measure average citation rates.

Once people started caring about citation counts, they naturally also started thinking about what journal would help them generate a maximum number of citations:

“Suddenly where you published became immensely important. . . . Almost overnight, a new currency of prestige had been created in the scientific world.”

Savvy entrepreneurs like Ben Lewin, who founded Cell in 1974, happily added fuel to the fire.

By rejecting far more papers than they accepted, journals became selective clubs that researchers wanted to become a part of.

Older journals had to follow the trend.

Nature’s acceptance rate fell from 35% of submitted papers in 1974 to around 12.5% in 1980 and 8% in 2024 (and most papers “are not even deemed worthy of a submission to Nature by their authors”).

Journals were no longer “passive instruments to communicate science” but active players.

The invention of scientific prestige metrics (measured by citation counts and impact factors, and reinforced through editorial selection and peer review) completely transformed the dynamics of the game.

Within a few years, metrics such as the ones invented by Eugene Garfield became the primary objective.

Initially, this seemed like a good development. Having directly measurable metrics seems much fairer and more effective than relying on some vague sense of credibility, authority, and importance.

Soon they were used to make funding, hiring, and promotion decisions.

But then, of course, the Cobra effect kicked in.

As always happens, when a measure becomes a target, it ceases to be a good measure.

The resulting perverse incentives are well-documented.

So, in summary:

Papers used to be written to be read.

Now they are written to be cited and to be added to CVs.

(Yes, people do cite papers without reading them all the time.)

Every issue with modern research papers can be traced back directly to these perverse incentives.

For example, why is the number of authors per paper exploding?

Well, co-authoring is an effective way to pad your publication list:

If you team up with a colleague doing similar work and write two half-papers instead of one full paper each, both of you end up with your names on twice as many papers, with no increase in workload. Find a third researcher to join in and you can get your name on three papers a year. And so on.

Anything that doesn’t promise to bring in citations is no longer deemed worth doing.

It’s why no one shares works in progress or takes the time to explain their thinking properly.

This is also why every attempt to fix scientific publishing by founding a new journal that values good writing and clear explanations will fail.

Researchers don’t cite the clearest explanation but whoever published the claim first (or is the most famous).

They also don’t work on whatever they think is most interesting or important. Instead, to have any chance of accumulating a meaningful number of citations on the time scales that funding and hiring committees operate on, you have to work on topics everyone else is working on. This is why virtually all research published nowadays is incremental instead of creative and fundamental.

Co-authoring “peer review-proof” papers stuffed with incremental claims is how you maximize your citation count.

It’s what you have to do to win in modern academia.

And sadly, as in any game like this, the careerists are outcompeting everybody else.

So I don’t think it’s a coincidence that these developments went hand in hand with the slowdown of scientific progress.

But I will fight anyone who claims this was inevitable or that the change is irreversible.

How to Fix It

There is absolutely no reason why “true seekers” can’t still tackle fundamental problems in creative ways and write about their findings, their confusion, and the roadblocks they encounter in a personal, readable way.

Incentives are an incredibly powerful force. But if you opt out of the game, they lose their power.

No one outside of academia cares about stuff like the h-index or impact factor.

Peer review, polished multi-author "blockbuster papers," selective journals, citation counting, and researchers as a class entirely removed from the rest of society are all modern inventions.

Science progressed perfectly well for most of history before they were introduced.

Most key discoveries were made by what we would nowadays call amateur scientists and published without peer review.

Marie Curie only got an official university job after she got famous.

Einstein, of course, was a patent clerk during his annus mirabilis.

Antonie van Leeuwenhoek made his living as a draper and invented microbiology in his spare time.

Thomas Bayes was a priest.

Michael Faraday only had minimal formal education.

Ada Lovelace had no official "computer science" training (it didn’t exist yet!).

Charles Darwin was a medical-school dropout and theology student.

The guy who founded genetics, Gregor Mendel, was a monk.

Norman Lockyer, the founder of Nature, was dabbling in astronomy in his spare time purely as an intellectually productive hobby. Among other things, he co-discovered and named the element helium.

George Green, who introduced Green's functions, a concept now at the heart of quantum field theory, was a 19th-century miller.

George Boole was a schoolteacher who taught himself mathematics.

The claim that ideas got harder to find and now it requires highly specialized, full-time research to make any progress is simply false.

All it takes to turn things around is that more people wake up to the fact that they too can do science.

Once enough people participate, the current system will become obsolete.

A century from now, people will look back at today's 'professional science' and laugh.

How could people get so trapped by that ultimately meaningless status game?

Until then, let them play.

And let us get back to grappling with nature, not metrics.
